Background

Over the next few months I’m intending to have a series of posts on recent developments in MCMC and algorithms of Metropolis-Hastings type. These posts will assume a basic familiarity with stochastic simulation and R. For reference, I have some old notes on stochastic simulation and MCMC from a course I used to teach. There were a set of computer practicals associated with the course which focused on understanding the algorithms, mainly using R. Indeed, this post is going to make use of the example from this old practical. If anything about the rest of this post doesn’t make sense, you might need to review some of this background material. My favourite introductory text book on this subject is Gamerman’s Markov Chain Monte Carlo. In this post I’m going to briefly recap the key idea behind the Metropolis-Hastings algorithm, and illustrate how these kinds of algorithms are typically implemented. In order to keep things as simple as possible, I’m going to use R for implementation, but as discussed in a previous post, that probably won’t be a good idea for non-trivial applications.

Metropolis-Hastings

The motivation is a need to understand a complex probability distribution, p(x). In applications to Bayesian statistics, this distribution is usually a posterior from a Bayesian analysis. A good way to understand an intractable distribution is to simulate realisations from it and study those. However, this often isn’t easy, either. The idea behind MCMC is to simulate a Markov chain whose equilibrium distribution is p(x). Metropolis-Hastings (M-H) provides a way to correct a fairly arbitrary transition kernel q(x’|x) (which typically won’t have p(x) as its equilibrium) to give a chain which does have the desired target. In M-H, the transition kernel is used to generate a proposed new value, x’ for the chain, which is then accepted as the new state at random with a particular probability a(x’|x)=min(1,A), where A = p(x’)q(x|x’)/[p(x)q(x’|x)].

If the value is accepted, then the chain moves to the proposed new state, x’. If the value is not accepted, the chain still advances to the next step, but the new state is given by the previous state of the chain, x.

It is clear that this algorithm is also a Markov chain, and that for x’ != x, the transition kernel of this chain is q(x’|x)a(x’|x). A couple of lines of algebra will then confirm that this “corrected” Markov chain satisfies detailed balance for the target p(x). Therefore, under some regularity conditions, this chain will have p(x) as its equilibrium, and this gives a convenient way of simulating values from this target.

Special case: the Metropolis method

It is clear from the form of the acceptance probability that a considerable simplification arises in the case where the proposal kernel is symmetric, having q(x’|x)=q(x|x’). This is useful, as a convenient updating strategy is often to update the current value of x by adding an innovation sampled from a distribution that is symmetric about zero. This clearly gives a symmetric kernel, which then drops out of the acceptance ratio, to give A=p(x’)/p(x).

Example

The example we will use for illustration is a Metropolis method for the standard normal distribution using innovations from a U(-eps,eps) distribution. Below is a simple R implementation of the algorithm

metrop1=function(n=1000,eps=0.5)

{

vec=vector("numeric", n)

x=0

vec[1]=x

for (i in 2:n) {

innov=runif(1,-eps,eps)

can=x+innov

aprob=min(1,dnorm(can)/dnorm(x))

u=runif(1)

if (u < aprob)

x=can

vec[i]=x

}

vec

}

First a candidate value (can) is constructed by perturbing the current state of the chain with the uniform innovation. Then the acceptance probability is computed, and the chain is updated appropriately depending on whether the proposed new value is accepted. Note the standard trick of picking an event with probability p by checking to see if u<p, where u is a draw from a U(0,1).

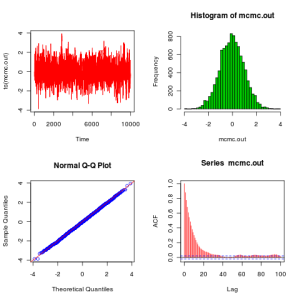

We can execute this code and plot the results with the following peice of code:

plot.mcmc<-function(mcmc.out)

{

op=par(mfrow=c(2,2))

plot(ts(mcmc.out),col=2)

hist(mcmc.out,30,col=3)

qqnorm(mcmc.out,col=4)

abline(0,1,col=2)

acf(mcmc.out,col=2,lag.max=100)

par(op)

}

metrop.out<-metrop1(10000,1)

plot.mcmc(metrop.out)

From these plots we see that the algorithm seems to be working as expected. Before finishing this post, it is worth explaining how to improve this code to get something which looks a bit more like the kind of code that people would actually write in practice. The next version of the code (below) makes use of the fact that min(1,A) is redundant if you are just going to compare to a uniform (since values of the ratio larger than 1 will be accepted with probability 1, as they should), and also that it is unnecessary to recalculate the likelihood of the current value of the chain at every iteration – better to store and re-use the value. That obviously doesn’t make much difference here, but for real problems likelihood computations are often the main computational bottleneck.

metrop2=function(n=1000,eps=0.5)

{

vec=vector("numeric", n)

x=0

oldlik=dnorm(x)

vec[1]=x

for (i in 2:n) {

innov=runif(1,-eps,eps)

can=x+innov

lik=dnorm(can)

a=lik/oldlik

u=runif(1)

if (u < a) {

x=can

oldlik=lik

}

vec[i]=x

}

vec

}

However, this code still has a very serious flaw. It computes likelihoods! For this problem it isn’t a major issue, but in general likelihoods are the product of a very large number of small numbers, and numerical underflow is a constant hazard. For this reason (and others), no-one ever computes likelihoods in code if they can possibly avoid it, but instead log-likelihoods (which are the sum of a large number of reasonably-sized numbers, and therefore numerically very stable). We can use these log-likelihoods to calculate a log-acceptance ratio, which can then be compared to the log of a uniform for the accept-reject decision. We end up with the code below, which now doesn’t look too different to the kind of code one might actually write…

metrop3=function(n=1000,eps=0.5)

{

vec=vector("numeric", n)

x=0

oldll=dnorm(x,log=TRUE)

vec[1]=x

for (i in 2:n) {

can=x+runif(1,-eps,eps)

loglik=dnorm(can,log=TRUE)

loga=loglik-oldll

if (log(runif(1)) < loga) {

x=can

oldll=loglik

}

vec[i]=x

}

vec

}

It is easy to verify that all 3 of these implementations behave identically. Incidentally, most statisticians would consider all 3 of the above codes to represent exactly the same algorithm. They are just slightly different implementations of the same algorithm. I mention this because I’ve noticed that computing scientists often have a slightly lower-level view of what constitutes an algorithm, and would sometimes consider the above 3 algorithms to be different.

Thank you so much for this and the earlier MCMC post (comparing R, C, Java and Python)! I’m trying to learn this out of textbooks which explain the theory well… but it is also immensely helpful to hear about numerical-computing pitfalls and see simple examples of “the kind of code one might actually write,” as you put it. I look forward to the rest of this series! Cheers,

Jerzy

Nice post! I like the details – majority of books and of course papers do not state such important details. I guess it is assumed that the readership should have know this via some training, but …

I’m fairly new to MCMC myself. It would be interesting to see how the code gets implemented when one already has some data in hand and a potential model. (i.e. Some kind of mixed model F= p*guassian1+(1-p)*guassian2, 50 (or some number “m”) observations, guassian1 and guassian2 “known”, how to find p?)

what’s the best way to estimate the mean, etc. if you carry out a simple MH algorithm as described? Are the R functions mean() and var() valid in this case? I have found that batchmeans bm() gives a different value for the mean than mean(). I would like to find a value for the mean and a standard error

Hello! Excellent post! Could you give a code example with a non-symmetric proposal distribution please? I am confused how to do that. Every single example I find on-line uses a normal proposal distribution. Thank you.

Thx for your post Darren, which has helped me starting to understand Bayesian inference. However, while playing today with some home brewed MCMC code, I found that the chains for the model parameters have quite some variation, even when fed with big data sets that fit the model perfectly. This is counterintuitive, I would expect the uncertainty in the fit parameters to go down with the amount of ‘noise’ in the data, eventually reaching 0. This will never be reached with MCMC because of the stochastic nature of the sample updates. I would appreciate any comment on this.

Peter

If your model is identifiable, then the posterior variance for your parameters should decrease as the amount of data increases. But obviously, there will always be some posterior uncertainty for a finite amount of data. Also, not all models are identifiable – so start with a simple model that you know is.

Sincerely I am new to this field and am on my way to submit my thesis next month. My major is mathematics and it needs some statistics and econometric stuffs. could someone help me on MH algorithm r-codes for estimating GARCH (1, 1) model.

i need metropolis hasting algorithm for model estimation in ratio type estimation in simple random sampling by generating psedu random numbers from uniform and generalized logistic distribution .model is y=theta*x+e

Is there any reference i can look up to, in order to justify the (reasonable) choice of employing log-likelihoods to avoid underflow issues?

Articles, books that explicitly justify this established practice?

Thank you.

check end of section 1.12.1 http://www.mcmchandbook.net/HandbookChapter1.pdf

This is a chapter in the following monography http://www.mcmchandbook.net/HandbookTableofContents.html

check end of section 1.12.1 in http://www.mcmchandbook.net/HandbookChapter1.pdf

This is a chapter from the following monography http://www.mcmchandbook.net/HandbookTableofContents.html

just change the starting value to

x = 100

and dnorm(x) will return 0, and then the algorithm will be in deep trouble.

Good code with nice explanations. I agree entirely with what you said, about metrop1 and metrop2 being poor algorithms. But i think metrop3 has rooms for improvement still. I copy the code with my modifications here, and then explain them below:

metrop3a=function(n=1000000,eps=0.5)

{

vec=vector(“numeric”, n)

x=0

oldll=-x*x/2

vec[1]=x

for (i in 2:n) {

can=x+runif(1,-eps,eps)

loglik=-can*can/2

loga=loglik-oldll

if (loga>=0 || exp(loga)>runif(1)) {

x=can

oldll=loglik

}

vec[i]=x

}

vec

}

here are my justifications for the changes.

first i think we need bigger samples to get anything reliable, and 100000 is too small. i usually take 10M or 100M samples (in C) but R is too slow to do that.

Second if you call dnorm(x, log=TRUE) a million times, R is probably calculating the log of (2*Pi) a million times, which is a waste of time. (This is my suspicion as I have not checked the C source code for R.) The main point about MCMC is that it does not need the normalizing constant (or its log). Here -x*x/2 is the log of the unnormalized posterior, so this makes it clear that knowledge of the (normalized) posterior density is unnecessary in the algorithm, and knowledge of the posterior density ratio (or its log), in which the normalizing constant cancels, is sufficient. A serious MCMC program will probably have the log of the unnormalized posterior written as a function.

The third change is minor. If log(a) >= 0, then a >= 1, and since a is the probability with which we accept the proposal, with log(a) >= 0 we already know we accept the proposal, so the modified code avoids a calculation of one log or exp function when loga>0. I am not sure whether exp or log is more expensive to calculate, and that line in the middle can be written as follows as well:

if (loga>=0 || log(runif(1)) < loga) {

Thank you, this is a very clear and useful post.