I’ve recently acquired a new laptop with an Intel i7 Quad Core CPU – an i7-940XM to be precise, and I’m interested in the possibility of running parallel MCMC codes on this machine (a Dell Precision M4500) in order to speed things up a bit. I’m running the AMD64 version of Ubuntu Linux 10.10 on it, as it has 8GB of (1333MHz DDR2 dual channel) RAM. It also contains an NVIDIA 1GB Quadro FX graphics card, which I could probably also use for GPU-style speed-up using CUDA, but I don’t really want that kind of hassle if I can avoid it. In a previous post I gave an Introduction to parallel MCMC, which explains how to set up an MPI-based parallel computing environment with Ubuntu. In this post I’m just going to look at a very simple embarrassingly parallel Monte Carlo code, based on the code monte-carlo.c from the previous post. The slightly modified version of the code is given below.

#include <math.h>

#include <mpi.h>

#include <gsl/gsl_rng.h>

#include "gsl-sprng.h"

int main(int argc,char *argv[])

{

int i,k,N; long Iters; double u,ksum,Nsum; gsl_rng *r;

MPI_Init(&argc,&argv);

MPI_Comm_size(MPI_COMM_WORLD,&N);

MPI_Comm_rank(MPI_COMM_WORLD,&k);

Iters=1e9;

r=gsl_rng_alloc(gsl_rng_sprng20);

for (i=0;i<(Iters/N);i++) {

u = gsl_rng_uniform(r);

ksum += exp(-u*u);

}

MPI_Reduce(&ksum,&Nsum,1,MPI_DOUBLE,MPI_SUM,0,MPI_COMM_WORLD);

if (k == 0) {

printf("Monte carlo estimate is %f\n", Nsum/Iters );

}

MPI_Finalize();

exit(EXIT_SUCCESS);

}

This code does 10^9 iterations of a Monte Carlo integral, dividing them equally among the available processes. This code can be compiled with something like:

mpicc -I/usr/local/src/sprng2.0/include -L/usr/local/src/sprng2.0/lib -o monte-carlo monte-carlo.c -lsprng -lgmp -lgsl -lgslcblas

and run with (say) 4 processes with a command like:

time mpirun -np 4 monte-carlo

How many processes should one run on this machine? This is an interesting question. There is only one CPU in this laptop, but as the name suggests, it has 4 cores. Furthermore, each of those cores is hyper-threaded, so the linux kernel presents it to the user as 8 processors (4 cores, 8 siblings), as a quick cat /proc/cpuinfo will confirm. Does this really mean that it is the equivalent of 8 independent CPUs? The short answer is “no”, but running 8 processes of an MPI (or OpenMP) job could still be optimal. I investigated this issue empirically by varying the number of processes for the MPI job from 1 to 10, doing 3 repeats for each to get some kind of idea of variability (I’ve been working in a lab recently, so I know that all good biologists always do 3 repeats of everything!). The conditions were by no means optimal, but probably quite typical – I was running the Ubuntu window manager, several terminal windows, had Firefox open with several tabs in another workspace, and Xemacs open with several buffers, etc. The raw timings (in seconds) are given below.

NP T1 T2 T3 1 62.046 62.042 61.900 2 35.652 34.737 36.116 3 29.048 28.238 28.567 4 23.273 24.184 22.207 5 24.418 24.735 24.580 6 21.279 21.184 22.379 7 20.072 19.758 19.836 8 17.858 17.836 18.330 9 20.392 21.290 21.279 10 22.342 19.685 19.309

A quick scan of the numbers in the table makes sense – the time taken decreases steadily as the number of processes increases up to 8 processes, and then increases again as the number goes above 8. This is exactly the kind of qualitative pattern one would hope to see, but the quantitative pattern is a bit more interesting. First let’s look at a plot of the timings.

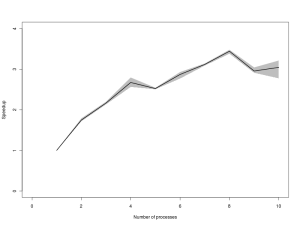

You will probably need to click on the image to be able to read the axes. The black line gives the average time, and the grey envelope covers all 3 timings. Again, this is broadly what I would have expected – time decreasing steadily to 4 processes, then diminishing returns from 4 to 8 processes, and a penalty for attempting to run more than 8 processes in parallel. Now lets look at the speed up (speed relative to the average time of the 1 processor version).

Here again, the qualitative pattern is as expected. However, inspection of the numbers on the y-axis is a little disappointing. Remeber that this not some cleverly parallelised MCMC chain with lots of inter-process communication – this is an embarrassingly parallel Monte Carlo job – one would expect to see close to 8x speedup on 8 independent CPUs. Here we see that for 4 processes, we get a little over 2.5x speedup, and if we crank things all the way up to 8 processes, we get nearly 3.5x speedup. This isn’t mind-blowing, but it is a lot better than nothing, and this is a fairly powerful processor, so even the 1 processor code is pretty quick…

So, in answer to the question of how many processes to run, the answer seems to be that if the code is very parallel, running 8 processes will probably be quickest, but running 4 processes probably won’t be much slower, and will leave some spare capacity for web browsing, editing, etc, while waiting for the MPI job to run. As ever, YMMV…

great post. I have myself an I7 processor, hackintosh I built. I did a performance monte carlo MPI study, my results are very similar to the ones I see here

Interesting. Did you try disabling Hyperthreading in the BIOS and running the code with 4 threads? I find that Matlab (R2011a, Ubuntu 64-bit) is a bit quicker on benchmarks with HT disabled. Wondering if that’s true in general of number crunching programs or it’s just a Matlab thing.

No I didn’t disable hyperthreading, but I thought about it, as it raises some interesting issues. I’m not surprised by the results you see – I would expect to see the same with my MPI jobs – there is no point wasting time trying to cleverly hyperthread when you have 4 CPU intensive threads – it makes sense that that would slow things down. However, the other potential big difference is the “baseline” speed of one thread. Presumably one thread will run more slowly with hyperthreading disabled, which will then make the multi-threaded jobs look faster relative to 1 thread, even if they are not actually any faster than when hyperthreading is turned on… This is potentially misleading, I think. But the main reason for leaving hyperthreading turned on (other than lazyness) is the same reason I ran stuff alongside a window manager, firefox, etc. – to get some idea about real-world speed-ups, rather than theoretical speedup. Most people are going to want hyperthreading turned on, just as they want to run a window manager, and I am really interested in how much faster my code can run relative to my single process hyperthreaded job… Thanks for your feedback.

I see. We have 4 i7s with similar configurations here and initially I had disabled HT on just one. The benchmarks looked a bit better and I didn’t see adverse consequences in everyday use (Matlab, Python, Firefox, NX and so forth), so I disabled HT on all 4 after a few months. Haven’t switched back.

I’ve never used the RNGs in the GSL so sorry if this is a stupid question but how have you handled seeding the generator. Is each thread definitely getting its own, independent random number stream?

In a nutshell, yes. But this functionality is not directly provided by the GSL. I’m actually using SPRNG to generate independent streams on each thread, then wrapping each one up as a GSL generator so that I can use the GSL non-uniform generation functions with the SPRNG streams. See my post on getting started with parallel MCMC for further details: https://darrenjw.wordpress.com/2010/12/14/getting-started-with-parallel-mcmc/

Very nice post. Strangely, there’s hardly any documentation on how to run MPI applications on multithreaded processors, and your post thows proper light on it.

A few comments: Since your problem is being strong scaled, it might be well worth running it with a larger problem size, or maybe abandon this altogether and make it weak scale. The reason is that since we want to see whether the extra threads in the processor function as extra MPI processes (and we don’t know the details, so we can only guess), we need to ensure that we distribute the load equally across these cores.

I would suggest putting in N particles in each core rather than dividing up N amongst 8. I am actually going to take the liberty of lifting your code and running it on my cluster, with N MPI tasks in a hyperthreaded environment, vs N/2+N/2 tasks in non-hyperthreaded nodes.

Finally – and no, I speak with the integrity of a man married to science – I can tell you with absolute certainty times appropriate speedup – and which you probably know only too well, that MC codes will be trodden underfoot by GPUs. I am currently working with a monstrously large QMC code, and it’s pretty hard even to get the runs to complete in a reasonable time in their pure MPI+OPenMP avatars.

Cheers, and keep the good posts coming!

I’ve decided to let your comment through rather than flagging as spam, as it does contain some content. However, I note that your IP address is within the NVIDIA domain, and I consider it very poor form not to declare a competing interest in a comment of this nature. But then that sums up what I think about NVIDIA and their marketing practises.

I had no idea that an innocent comment of mine would be taken as a marketing gimmick.

Hi Darren: I am actually very curious in knowing what happens if you weak scale this problem by changing 10e9/N to 10e9 in the for loop (so that you will be having a total number of particles that scales with N).

If you are working on your laptop, what made you choose MPI over OpenMP? I’m about to start writing a package for R and am trying to decide which to use. Cheers.

The main reason is that MPI has the potential to scale up to clusters of workstations – I have used MPI successfully on 16-node Beowulf clusters in the past. If you are really just looking for some speed-up on a single machine, OpenMP is probably fine.

ok thanks Darren, I see your point. Have you ever written an extension for R in C that uses MPI? I have written some code in C which I call from R that uses OpenMP because you just compile it like normal C code with the -lomp library, but with MPI you need to use mpirun etc and I’m not sure how this would work with when trying to call it from R. Naturally when I search for R and MPI all I get is the Rmpi package which isn’t what I’m after. Have you tried to do this?

The short answer is “no”. But I’m not really sure it makes sense to want to link a multi-process MPI problem into a running R process. You probably either want a distributed solution, using “snow” or “Rmpi”, or a system call to fire up a bunch of MPI processes using “mpirun”. Alternatively, your OpenMP solution may be exactly what you want!

I speak with the integrity of a man married to science – I can tell you with absolute certainty times appropriate speedup – and which you probably know only too well, that MC codes will be trodden underfoot by GPUs. I am currently working with a monstrously large QMC code.

Paket Pulau Tidung

I would suggest putting in N particles in each core rather than dividing up N amongst 8. I am actually going to take the liberty of lifting your code and running it on my cluster

Travel Teluk Kiluan Murah

Hello Darren,

I ran your code and optimized for Python…. doing the same calc.

u = gsl_rng_uniform(r);

ksum += exp(-u*u);

I have this result for 10^9 iterations, on I7 Laptop 8Go ram (Asus) dual Core:

4procs,1000000000 iter,2500 chunks,— 12.205698013305664 sec —

4procs,2000000000 iter,2500 chunks,— 23.793360948562622 sec —

1procs,2000000000 iter,2500 chunks,— 49.759846210479736 sec —

Which is X2 faster than your C++ code….. (am myself surprised)